crisis reduced in 17% of cases. Meanwhile, their perception of risk increased in 8% of cases. Clinicians rated the value of the risk predictions for mitigating the risk of crisis and for managing the caseload priority on the second feedback form (F2 in Table 2). The completion rate for F2 was 84% (n = 846) (see Table 1 for a detailed breakdown of the teams). Five months after the study started, semi-structured interviews were conducted to obtain additional insights into the algorithm’s implementation and the effect on decision-making in clinical practice (see the qualitative report in Supplementary Materials–Qualitative Evaluation).

Mitigating the risk of crisis

We evaluated the opportunity to mitigate the risk of a crisis using two questions that probed whether the algorithm helped identify patient deterioration and enabled a pre-emptive intervention to prevent a crisis. Predictions were rated useful in 64% (n = 602) of the presented cases overall and in more than 70% of cases in three of the four CMHTs. Only one CMHT (Team 4 in Table 2) reported no added value at a high percentage (71%; n = 145), with all other teams reporting percentages below 30%. Notably, CMHTs reported that the model was clinically valuable in terms of preventing a crisis in 19% (n = 175) of cases and in terms of identifying the deterioration of patient conditions in 17% (n = 159) of cases.

Managing the caseload

The value of our tool for managing caseload priorities was indirectly captured by analyzing whether risk predictions helped clinicians identify patient deterioration and decide which patients to contact. Managing caseload priorities is a complex task (especially in high-demand settings), and clinicians often rely on various parameters to prioritize caseloads, including prior knowledge about individual patients, subjective views about risks and diagnosis severity. Accordingly, we opted to capture the value of risk predictions using a general question that prompts clinicians to directly rate the value of the predictive tool for managing their caseload, with the responses indicating that the model output was used to manage caseload priorities in 28% (n = 268) of cases (see Table 2 for a detailed summary).

Discussion

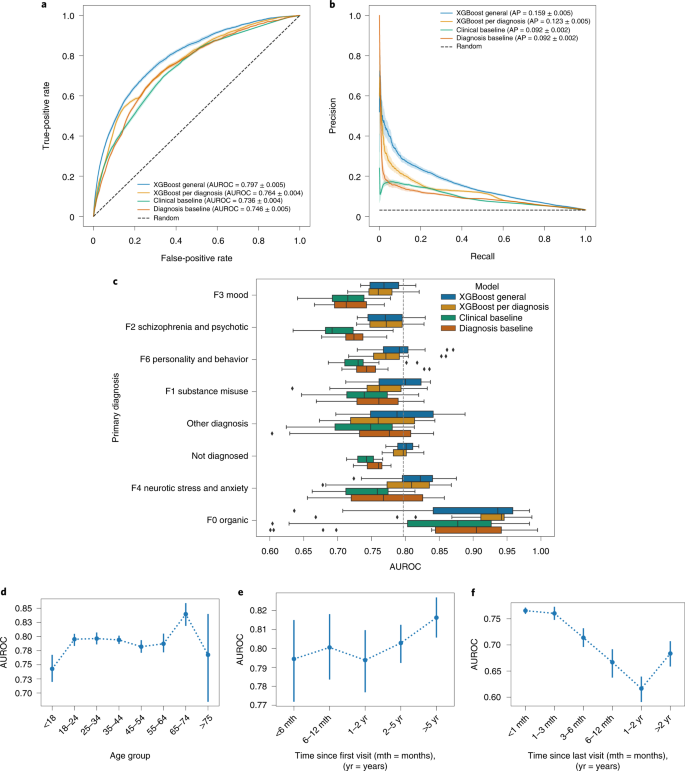

We have demonstrated the feasibility of predicting mental health crises by applying machine learning techniques to longitudinally collected EHR data, obtaining an AUROC of 0.797 for the general model. Despite the data availability concerns associated with the EHR (related to periods with no patient records), querying the prediction model continuously—that is, in a rolling window manner—produced a better performance than that obtained by the baseline models. The lack of records for more than 3 months resulted in a 7% drop in AUROC. Meanwhile, having no records about a patient for more than 6 months or 1 year contributed to drops of 13% and 20%, respectively. Unsurprisingly, having a longer data history improved the risk prediction performance for a given patient.

Among the machine learning models evaluated, XGBoost demonstrated the best overall performance. Nonetheless, in a few cases, there were only marginal or no significant improvements in comparison to other techniques (Extended Data Figs. 3 and 4). Training different models for each group of disorders to leverage the specificity of mental health disorders did not prove superior to the general model despite the differences in the performance of the general model for different disorders (Fig. 3c). No significant difference in performance was observed across different diagnostic groups, except for increased performance for organic disorders (likely due to their lower prevalence). We further expanded the subgroup analysis to assess the algorithm’s fairness. Among the common protected attributes (namely, gender, age, ethnic groups and disability), we observed a 5% increase in the AUROC for patients aged 65–74 years (likely a consequence of the considerably lower prevalence of this group) and a 7% lower AUROC for the ‘Black’ ethnic subgroup compared to the ‘White’ ethnic subgroup. We refrained from unpacking the potential causes of this disparate effect due to the complexity of known and unknown biases and factors that could not be controlled for (see Supplementary Materials–Fairness Analysis).

We evaluated whether a tool predicting and presenting risk of mental health crisis provides added value for clinical practice in terms of managing caseloads and mitigating the risk of crisis. On average, the CMHTs disagreed with only 7% of the model predictions, with the model outputs found to be clinically useful in 64% of individual cases. We did not successfully identify why considerably lower scores were observed in the responses from one of the four CMHTs, with neither the study process nor team and patient selection introducing any known bias. However, crucially, risk predictions were relevant to preventing crises in 19% of cases, to identifying the deterioration of a patient’s condition in 17% of cases and to managing caseload priorities in 28% (n = 268) of cases. Notably, the importance of the algorithm for identifying at-risk patients who would otherwise have been missed emerged from the semi-structured interviews conducted with the clinicians as part of the qualitative evaluation (see Supplementary Materials–Qualitative Evaluation). The relatively high percentage of cases (36%) in which predictions were not perceived as useful was substantially affected by the number of serious cases that were already being recognized and managed by the CMHTs. Nevertheless, the clinicians opted to receive the list of patients at the highest risk of experiencing a crisis even if doing so would mean including patients whom they were already monitoring. It is reasonable to expect that the requirements for the practical implementation would not be considerably different in other clinical settings. That is, broadening the prediction list to all patients registered in the hospital system would reduce the value of each prediction relative to clinician caseload, thus having little benefit.

Our study’s main limitation concerns the known and potentially unknown specificity of the single-center cohort. Given that EHRs are characterized by high dimensionality and heterogeneity, risk prediction algorithms suffer from overfitting the model to the data, which limits the generalizability of the results and undermines most predictive features. However, many data fields are expected to be routinely captured by typical mental health centers, even if they only register crisis emergencies, visits and hospitalizations. Based on this understanding, we selected only eight of the top 20 features derived solely from events related to crises, contacts and hospitalization (see the list in Supplementary Material–Crisis Prediction Model) and evaluated the corresponding model. The resulting AUROC was 0.781 (compared to 0.797 for the general model). Furthermore, we limited our algorithm’s applicability to patients with a history of relapse, a decision that was based on healthcare demand: patients prone to relapse require a considerable proportion of healthcare resources because they frequently need urgent and unplanned support, which engenders major challenges for optimizing healthcare resources. Thus, further research should probe the feasibility of developing an algorithm to detect first crises. Finally, although the clinicians reported that the prediction model helped to prevent a crisis in 19% of cases, this eventuality was not witnessed because it would have implied that the clinicians did not react to the predictions, which would have been ethically and legally unacceptable.

Machine learning techniques trained on historical patient records have demonstrated considerable potential to predict critical events in different medical domains (for example, circulatory failure, diabetes and cardiovascular disorders)11,12,13,14,15. In the mental health domain, prediction algorithms have typically focused on detecting individual propensity to die by suicide or develop psychosis, with no extant studies attempting to continuously detect important mental health events or those that would require readmission for urgent care or hospitalization. Nonetheless, several studies have considered predictions of unplanned hospital readmissions regardless of their underpinning reason17,43,44,45,46 and obtained AUROCs between 0.750 and 0.791 for predicting the risk of readmission within 30 days (similar to our result of 0.797 within 28 days). Although such algorithms can bring important benefits to healthcare, their potential to improve caseload management or prevent unwanted health outcomes is limited by (1) the timing of queries (only at discharge rather than continuously) and (2) the nature of readmissions (not specific to any disorder in particular; as highlighted by the authors46 and the literature47, most such readmissions are not preventable). Running predictions continuously13,14 provides an updated risk score based on the latest available data, which typically contain the most predictive information; in the case of mental health, this is crucial to improving healthcare management and outcomes.

The rising demand for mental healthcare is increasingly prompting hospitals to actively work on identifying novel methods of anticipating demand and better deploying their limited resources to improve patient outcomes and decrease long-term costs9,48. Evaluating technical feasibility and clinical value are critical steps before integrating prediction models into routine care models32. From this perspective, our study paves the way for better resource optimization in mental healthcare and for the long-awaited shift in the mental health paradigm from reactive care (delivered in the emergency room) to preventative care (delivered in the community).

Methods

Study design and setting

This study comprised two phases. The first phase involved a retrospective cohort study designed to build and evaluate a mental health crisis prediction model reliant on EHR data. The second phase implemented this model in clinical practice as part of a prospective cohort study to explore the added value it provides in the clinical context. Added value was defined as the extent to which the predictive algorithm could support clinicians in managing caseload priorities and mitigating the risk of crisis.

The retrospective and prospective studies were both conducted at Birmingham and Solihull Mental Health NHS Foundation Trust (BSMHFT). One of the largest mental health trusts in the UK, BSMHFT operates over 40 sites and serves a culturally and socially diverse population of over 1 million patients. The retrospective study used data collected between September 2012 and November 2018; the prospective study began on 26 November 2018 and ran until 12 May 2019.

Ethical approval and consent

The Health Research Authority (HRA) approved the study. The HRA ensures that all NHS research governance requirements are met and that patients and public interests are protected. For the historical data used in the retrospective study, the need to obtain consent was waived on the basis of the use of anonymized data that cannot be linked to any individual patient. Furthermore, the consent form that had already been signed by patients upon joining the corresponding mental health service within the NHS included the potential purpose of using patient records for predictive risk analyses. Meanwhile, the participants in the prospective study were the healthcare staff members who consented to participation in the research and who had been trained in the use of the algorithm and its outputs in support of their clinical practice.

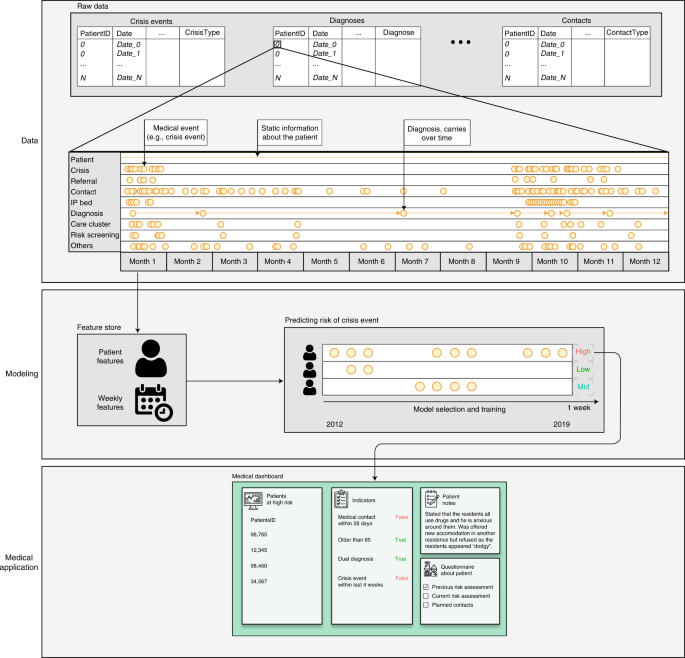

Dataset

The dataset comprised anonymized clinical records extracted from a retrospective cohort of patients who had been admitted to BSMHFT. The data included demographic information, hospital contact details, referrals, diagnoses, hospitalizations, risk and well-being assessments and crisis events for all inpatients and outpatients. No exclusion criteria based on age or diagnosed disorder were applied, meaning that patient age ranged from 16 to 102 years and that a wide range of disorders was included. However, to include only patients with a history of relapse, patients who had no crisis episode in their records were excluded. This decision was made because detecting first crises and detecting relapse events correspond to different ground truth labels and different data. Furthermore, given that detecting relapse events can leverage information about the previous crisis, patients with only one crisis episode were excluded because their records were not suitable for the training and testing phases. Additionally, patients with three or fewer months of records in the system were excluded because their historical data were insufficient for the algorithm to learn from. For the remaining patients, predictions were queried and evaluated for the period after two crisis episodes and after having the first record at least 3 months before querying the model. This produced a total of 5,816,586 electronic records from 17,122 patients in the database used for this study. Supplementary Table 1 breaks down the number of records per type, and Supplementary Table 2 compares the representation of different ethnic groups and genders in the study cohort, the original hospital cohort and the Birmingham and Solihull area.

Features and labels generation

With the exception of the static information, all EHR data included the associated date and time. The date and time refer to the moment when the specific event or assessment occurred (for example, the date and time that a patient was admitted to hospital or assigned a diagnosis). To prepare the data for the modeling task, each patient’s records were consolidated at a weekly level according to the date associated with the record. Following this process, we generated evenly spaced time series for each patient that spanned from the patient’s first interaction with the hospital to the study’s final week. The features and labels generated for each week were computed using the data with a date prior to that week. Static data susceptible to change over time (for example, marital status) were removed to mitigate the risk of retrospective leakage.

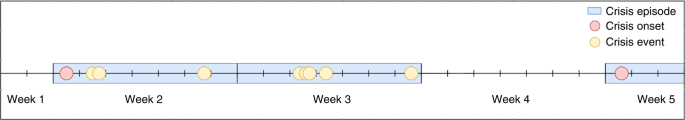

Label generation

To construct the binary prediction target, each patient-week was assigned a positive label whenever there was a relapse during the following 4 weeks (if the patient had not had a crisis during the current week) and a negative label otherwise. To assess the extent to which the model was sensitive to such a definition of the main label, we built 47 additional labels by varying three parameters:

The number of stable weeks (without crisis) necessary to consider a crisis episode concluded: from 1 to 4 weeks.

The prediction time window length (that is, the time window in which the algorithm assesses the risk of crisis): from 1 to 4 weeks.

The number of weeks between the time of querying the algorithm and the start of the prediction time window: from 0 to 2 weeks.
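The label definition and the parameter grid above can be sketched as follows. This is a minimal illustration, not the study's implementation: the function name `weekly_label`, the integer week indices and the dictionary keys are assumptions, and the handling of the stable-weeks parameter (which determines when one crisis episode ends and the next begins) is deliberately left out.

```python
from itertools import product


def weekly_label(crisis_weeks, week, horizon=4, gap=0):
    """Binary target for one patient-week (hypothetical helper).

    crisis_weeks: set of integer week indices in which the patient had a crisis.
    horizon: length of the prediction window in weeks.
    gap: weeks between the query time and the start of the prediction window.
    """
    crisis_weeks = set(crisis_weeks)
    # Weeks in which the patient is already in crisis do not get a positive label.
    if week in crisis_weeks:
        return 0
    start = week + 1 + gap
    window = range(start, start + horizon)
    return int(any(w in crisis_weeks for w in window))


# Every combination of the three parameters described in the text:
# 4 stable-week settings x 4 window lengths x 3 gaps = 48 label definitions,
# that is, the main label plus 47 additional ones.
label_grid = [
    {"stable_weeks": s, "horizon": h, "gap": g}
    for s, h, g in product(range(1, 5), range(1, 5), range(0, 3))
]
```

With a crisis in week 10, a query in week 7 yields a positive label under the default 4-week window, whereas a query in week 5 yields a negative one.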

Features generation

We extracted a total of 198 features from the ten data tables (Supplementary Table 5). Each data table was processed separately, and no imputation that could add noise to the data was performed. Feature extraction was performed according to six procedures:

Static or semi-static features. Demographics data were represented as constant values attributed to each patient, with age treated as a special case that changed each year.

Diagnosis features. Patients were assigned their latest valid diagnosed disorder or a ‘non-diagnosed’ label and then separated into diagnostic groups according to the latest valid diagnosed disorder at the last week of the training set to avoid leakage into the validation and test sets. Each diagnosed disorder was mapped to its corresponding first-level category according to the ICD-10 (ref. 34) code system. For instance, F200 paranoid schizophrenia disorder was mapped to the F2 Schizophrenia and Psychotic category. We shortened the names of the first-level ICD-10 categories for brevity and to improve figure layouts:

F0 Organic: organic, including symptomatic, mental disorders (ICD-10 codes F00–F09).

F1 Substance Misuse: mental and behavioral disorders caused by psychoactive substance use (ICD-10 codes F10–F19).

F2 Schizophrenia and Psychotic: schizophrenia and schizotypal and delusional disorders (ICD-10 codes F20–F29).

F3 Mood: mood (affective) disorders (ICD-10 codes F30–F39).

F4 Neurotic, Stress and Anxiety: neurotic, stress-related and somatoform disorders (ICD-10 codes F40–F49).

F6 Personality and Behavior: disorders of adult personality and behavior (ICD-10 codes F60–F69).

Other Diagnosis: any other disorder not contemplated by the previous categories (ICD-10 codes F50–F59 and F70–F99).

Not Diagnosed: no diagnosed disorder available in the EHR.
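The mapping from a diagnosis code to the shortened category names listed above can be sketched as a prefix lookup. The function name `diagnostic_group` and the treatment of empty or non-F codes are assumptions made for illustration only.

```python
# Shortened first-level ICD-10 category names, as listed in the text.
GROUPS = {
    "F0": "F0 Organic",
    "F1": "F1 Substance Misuse",
    "F2": "F2 Schizophrenia and Psychotic",
    "F3": "F3 Mood",
    "F4": "F4 Neurotic, Stress and Anxiety",
    "F6": "F6 Personality and Behavior",
}


def diagnostic_group(icd10_code):
    """Map an ICD-10 code such as 'F200' to its diagnostic group (hypothetical helper)."""
    if not icd10_code:
        # No diagnosed disorder available in the EHR.
        return "Not Diagnosed"
    prefix = icd10_code.strip().upper()[:2]
    # Assumption: anything outside the six named groups falls into 'Other Diagnosis'.
    return GROUPS.get(prefix, "Other Diagnosis")
```

For example, `diagnostic_group("F200")` returns the F2 Schizophrenia and Psychotic group, matching the example given in the text.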

EHR weekly aggregations. EHRs related to patient–hospital interactions were aggregated on a weekly basis for each patient. The resulting features constituted counts per type of interaction, one-hot encoded according to their categorization. If a specific type of event did not occur in a given week, a value of ‘0’ was assigned to the feature related to the corresponding type of event for the corresponding week.

Time-elapsed features. At each patient-week, for each type of interaction and category, we constructed a feature that counted the number of weeks elapsed since the last occurrence of the corresponding event. If the patient had never experienced such an event type up to that point in time, NaN values were used.

Last crisis episode descriptors. For each crisis episode, a set of descriptors summarizing the length and severity of the crisis episode was built. These descriptors were used to build features for the subsequent weeks until the next crisis occurred. If the patient had never had a crisis episode up to that point in time, NaN values were used.

Status features. For specific EHRs that are characterized by the start–end date, features for the corresponding weeks were built by assigning their corresponding value (or category); otherwise, they were set to NaN.
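Two of the procedures above, the weekly aggregations and the time-elapsed features, can be sketched for a single event type as follows. The helper names and the zero-based week indexing are assumptions; the sketch follows the stated rule that features for a week use only data dated before that week, and it leaves missing values as NaN rather than imputing them.

```python
import math


def weekly_counts(event_weeks, n_weeks):
    """Count events of one type per week; weeks with no event get 0."""
    counts = [0] * n_weeks
    for w in event_weeks:
        if 0 <= w < n_weeks:
            counts[w] += 1
    return counts


def weeks_since_last(counts):
    """Weeks elapsed since the last event strictly before each week.

    Returns NaN for weeks before which the patient never had this event type.
    """
    elapsed = []
    last = None
    for week, c in enumerate(counts):
        # Use only information available before the current week.
        elapsed.append(math.nan if last is None else week - last)
        if c > 0:
            last = week
    return elapsed


counts = weekly_counts([2, 2, 5], n_weeks=7)
elapsed = weeks_since_last(counts)
```

Here `counts` is `[0, 0, 2, 0, 0, 1, 0]`, and `elapsed` is NaN for the first three weeks followed by 1, 2, 3 and 1.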

In addition to EHR-based features, we also added the week number (of a year, 1–52) to account for seasonality effects. Given the cyclical nature of the feature, we encoded the information using the trigonometric transformations sine and cosine: $\sin(2\pi w/52)$ and $\cos(2\pi w/52)$, where $w$ is the week number.
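A minimal sketch of the cyclical encoding, assuming a period of 52 weeks and the common angle 2πw/52; the function name `encode_week` is hypothetical.

```python
import math


def encode_week(week, period=52):
    """Encode a week number (1-52) as a point on the unit circle."""
    angle = 2 * math.pi * week / period
    return math.sin(angle), math.cos(angle)


def circular_distance(week_a, week_b):
    """Euclidean distance between two encoded weeks."""
    sa, ca = encode_week(week_a)
    sb, cb = encode_week(week_b)
    return math.hypot(sa - sb, ca - cb)
```

The point of the encoding is that week 52 and week 1 end up close together, whereas the raw week numbers would place them at opposite ends of the range.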

Crisis prediction modeling and evaluation

We defined the crisis prediction task as a binary classification problem to be performed on a weekly basis. For each week, the model predicts the risk of crisis onset during the upcoming 28 days. Applying a rolling window approach allows for a periodic update of the predicted risk by incorporating the newly available data (or the absence of it) at the beginning of each week. This approach is very common in settings where the predictions are used in real time and when the data are updated continuously, such as for predicting circulatory failure or sepsis in intensive care units13,14.

We applied a time-based 80%/10%/10% training/validation/test split:

Training data started in the first week of September 2012 and ended in the last week of December 2017.

Validation data started in the first week of January 2018 and ended in the last week of June 2018.

Test data started in the first week of July 2018 and ended in the third week of November 2018.
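The time-based split can be sketched by assigning each patient-week to a partition according to its start date. The exact cut-off dates below are assumptions derived from the month boundaries given above, and `assign_split` is a hypothetical helper.

```python
from datetime import date

# Assumed boundaries: validation starts in January 2018, test in July 2018.
VALIDATION_START = date(2018, 1, 1)
TEST_START = date(2018, 7, 1)


def assign_split(week_start):
    """Return the partition for a patient-week, based only on its date."""
    if week_start < VALIDATION_START:
        return "train"
    if week_start < TEST_START:
        return "validation"
    return "test"
```

Splitting by date rather than at random keeps every evaluation week later than every training week, which mirrors how the model is queried in practice.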

Performance evaluations were conducted on a weekly basis, and each week’s results were used to build CIs on the evaluated metrics. All reported results were computed using the test set if not otherwise indicated.

Machine learning classifiers

For our final models, we used XGBoost49, an implementation of gradient boosting machines (GBMs)50, which was the best-performing algorithm. GBMs are algorithms that build a sequence of decision trees such that every new tree improves upon the performance of previous iterations. Given that XGBoost effectively handles missing data and is not sensitive to scaling factors, no imputation or scaling techniques were applied. For comparison, we also evaluated the performance of some state-of-the-art machine learning classifiers, including logistic regression, naive Bayes, random forest, isolation forest and neural networks (namely, multi-layer perceptron and long short-term memory recurrent neural networks, which have been used successfully in similar prediction studies based on EHR51). To ensure a fair comparison, standard scaling and imputation of missing values were performed for the classifiers that typically benefit from these procedures. We also performed 100 hyperparameter optimization trials for each classifier to identify the best hyperparameters. The search spaces are included in the Supplementary Materials (Supplementary Table 8).

Hyperparameter tuning and feature selection

To select the optimal hyperparameters for the trained models, we maximized AUROC based on the validation set using a Bayesian optimization technique. For this purpose, we used Hyperopt52, a sequential model-based optimization algorithm that performs Bayesian optimization via the Tree-structured Parzen Estimator53. This technique has a wide range of distributions available to accommodate most search spaces. Such flexibility makes the algorithm very powerful and appropriate for performing hyperparameter tuning on all of the classifiers used. The same methodology was used for feature selection. To that end, we grouped the features into categories based on the information gained and added a binary parameter assessing whether a particular feature should be selected (Supplementary Table 5).

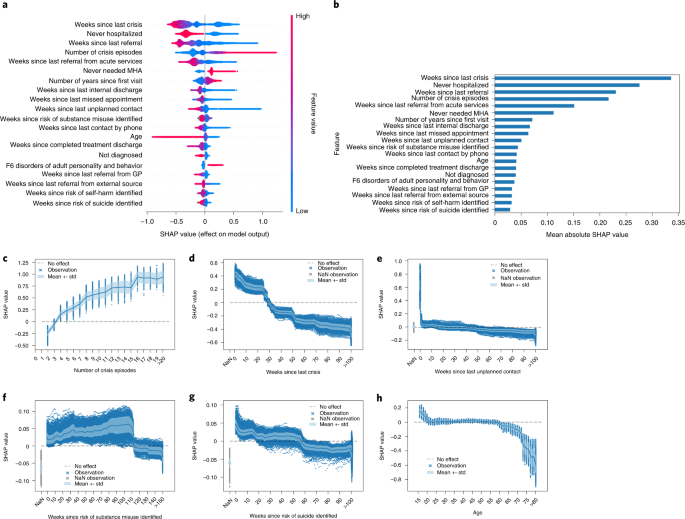

Model interpretation

We used SHAP values to measure the contribution that each feature made to the main model42. This technique is based on the Shapley value from game theory, which quantifies the individual contributions of all the participants of a game to the outcome and represents the state-of-the-art approach to interpreting machine learning models. SHAP values were computed using the Python package shap, version 0.35.0, and the TreeExplainer algorithm, an additive feature attribution method that satisfies the properties of local accuracy, consistency and allowance for missing data54. Feature attributions are computed for every particular prediction, assigning each feature an importance score that considers interactions with the remaining features. The resulting SHAP values provide an overview of the feature’s contribution based on its value and allow for both local and global interpretation. All SHAP values were computed from the test set.

To further evaluate the stability of the model and its interpretation, we conducted an experiment in which we generated 100 different samples by randomly selecting 40% of the patients per sample. We trained a model for each of the 100 samples and computed the SHAP values for the whole test set. The consistency of the most important predictors was evaluated through the cosine similarity between the SHAP values of the top 20 features of the final model and the models trained on each of the 100 samples. The results (presented in Supplementary Materials–Stability of Most Predictive Features) were consistent with the analysis of the general model.
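The similarity measure used in this stability experiment can be sketched as follows. How the SHAP values of each model were summarized into one vector is not specified here, so the example vectors (one importance value per top feature) are purely illustrative, and `cosine_similarity` is a hypothetical helper.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equally long vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return math.nan  # undefined when either vector is all zeros
    return dot / (norm_u * norm_v)


# Illustrative importance vectors for the same features in two models.
final_model = [0.30, 0.20, 0.10]
resampled_model = [0.28, 0.22, 0.09]
similarity = cosine_similarity(final_model, resampled_model)
```

A value close to 1 indicates that the resampled model weights the top features in nearly the same proportions as the final model.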

Statistical methods

If not otherwise indicated, all reported metrics in text, tables and figures refer to the performance evaluation on the test set. CIs for the reported performance metrics were computed using n = 25 temporal splits. Statistical analysis for model comparison was conducted based on the AUROC and its equivalence to the Mann–Whitney U-statistic, following the theory of generalized U-statistics for comparing correlated ROC curves55. The two-stage step-up method of Benjamini, Krieger and Yekutieli56 was used to correct the P values of the multiple tests performed. For figures showing curves (Figs. 3a,b and 4c–h, Extended Data Fig. 6c and Supplementary Fig. 1), solid lines and shaded areas correspond to the means and standard deviations of the performance metrics across the temporal splits in the test set. For figures featuring point plots (Fig. 3d–f and Extended Data Fig. 8a–f), center points and vertical bars correspond to the means and 95% CIs across the temporal splits in the test set. For box plot figures (Fig. 3c and Extended Data Fig. 7a–c), the solid line corresponds to the median value; the box limits correspond to the first (left limit) and third (right limit) quartiles; the whiskers denote the rest of the distribution range from Q1 − 1.5 × (Q3 − Q1) (left whisker) to Q3 + 1.5 × (Q3 − Q1) (right whisker); and the points displayed correspond to the outliers.
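The equivalence between the AUROC and the Mann–Whitney U-statistic mentioned above can be illustrated with a short sketch: the AUROC is the fraction of positive–negative pairs in which the positive case receives the higher score, with ties counted as one half. The function name is hypothetical, and the quadratic pairwise loop is chosen for clarity rather than efficiency.

```python
def auroc_mann_whitney(scores, labels):
    """AUROC computed as the normalized Mann-Whitney U-statistic."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    u = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                u += 1.0
            elif p == n:
                u += 0.5  # ties contribute half a concordant pair
    return u / (len(pos) * len(neg))


example = auroc_mann_whitney([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

In this toy example, three of the four positive–negative pairs are ordered correctly, so `example` equals 0.75.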

We evaluated the calibration of our proposed model and the model for each diagnosis; that is, we compared the PRS of the model with the observed risk, obtained by aggregating the observed labels. To calibrate the risk scores, we fitted an isotonic regression model38 to the validation set’s predictions and used it to transform the test set’s predictions. Consequently, the transformation applied to the PRS preserves the rank and minimizes the deviation between the actual target variable and the final PRS. We used 25 evenly spaced bins on the PRS to generate the calibration curve in Extended Data Fig. 6a,b.
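The calibration step can be illustrated with a small pool-adjacent-violators sketch of isotonic regression. This is not the implementation used in the study: the function names are hypothetical, and the fitted mapping is a step function, whereas library implementations often interpolate between fitted points.

```python
from bisect import bisect_right


def fit_isotonic(scores, labels):
    """Fit a non-decreasing step function from scores to observed risk."""
    # Pool identical scores first so that each threshold is unique.
    grouped = {}
    for s, y in zip(scores, labels):
        total, count = grouped.get(s, (0.0, 0))
        grouped[s] = (total + y, count + 1)
    blocks = []  # each block: [sum of labels, number of cases, lowest score]
    for s in sorted(grouped):
        total, count = grouped[s]
        blocks.append([total, count, s])
        # Merge backwards while the previous block has a higher mean.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            t, c, _ = blocks.pop()
            blocks[-1][0] += t
            blocks[-1][1] += c
    thresholds = [b[2] for b in blocks]
    values = [b[0] / b[1] for b in blocks]
    return thresholds, values


def calibrate(score, thresholds, values):
    """Map a raw score to its calibrated value; scores below the range get the first value."""
    idx = bisect_right(thresholds, score) - 1
    return values[max(idx, 0)]


# Fit on (validation) scores and labels, then transform a new (test) score.
thresholds, values = fit_isotonic([0.1, 0.2, 0.3, 0.4], [0, 1, 0, 1])
calibrated = calibrate(0.25, thresholds, values)
```

Because the fitted function never decreases, the transformation cannot reverse the ranking of patients, although it can introduce ties.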

Clinical evaluation

Participants

A total of 60 clinicians from four CMHTs participated in the study. Four were doctors, two were occupational therapists, two were duty workers, one was a social worker and 51 were nurses, including clinical leads and team managers (see Table 1 for an overview of the CMHTs). Each team had at least two coordinators who served as the first contact point for their team and who were responsible for assigning individual cases to the participating clinical staff. The four CMHTs reviewed crisis predictions from a total number of 1,011 cases in a prospective manner as part of their regular clinical practice. Although the initial plan was to include 1,200 cases, 189 cases were discarded from the analysis due to an internal technical error. Crucially, this error did not affect the study results beyond slightly reducing the sample size.

Data collection

The general model, using the most recent available data, was applied on a biweekly basis to generate the PRS for all patients. Patients were ranked, and each CMHT received a list of the 25 patients (belonging to their caseload) at greatest risk of crisis. The tool used by the participants contained a list of patient names and identifiers, risk scores and relevant clinical and demographic information (Supplementary Table 10).
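The ranking step can be sketched as a per-team top-k selection over the predicted risk scores. The tuple layout `(patient_id, team, prs)` and the function name `top_risk_lists` are assumptions made for illustration.

```python
from collections import defaultdict


def top_risk_lists(predictions, k=25):
    """Return, for each team, the ids of its k patients with the highest PRS.

    predictions: iterable of (patient_id, team, prs) tuples.
    """
    by_team = defaultdict(list)
    for patient_id, team, prs in predictions:
        by_team[team].append((prs, patient_id))
    return {
        team: [pid for _, pid in sorted(rows, key=lambda r: r[0], reverse=True)[:k]]
        for team, rows in by_team.items()
    }


example_lists = top_risk_lists(
    [("a", "T1", 0.2), ("b", "T1", 0.9), ("c", "T2", 0.5), ("d", "T1", 0.6)],
    k=2,
)
```

In the toy example, team T1 receives patients b and d, and team T2 receives patient c.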

Upon reviewing the list of patients, the CMHTs completed the F1 feedback form, which asked them to:

Provide their assessment of each patient’s crisis risk level and indicate agreement or disagreement with the algorithm-based prediction.

Specify their intended action in response to each prediction.

One week after the initial review, the CMHTs completed the F2 feedback form, which asked them to:

Provide each patient’s crisis risk level, based on further assessment, and indicate whether the tool had influenced them to change their previous assessment.

Indicate whether the algorithm-based predictions contributed valuably to managing caseload priority or mitigating the risk of crisis (due to early identification of symptomatic deterioration, enabling them to provide support or attempt to prevent a crisis).

Finally, five staff members (three community psychiatric nurses, one psychiatrist and one team manager) were individually interviewed and responded to a set of open-ended questions that concerned the added value of the crisis prediction model, its implementation and the facilitators and barriers to its use in practice. The interviews were conducted 5 months after the start of the study to sufficiently expose participants to the crisis prediction algorithm (see Supplementary Materials–Qualitative Evaluation for the interview reports).

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

EHRs that support this study’s findings contain highly sensitive information about vulnerable populations and, therefore, cannot be made publicly available. Any request to access the data will need to be reviewed and approved by the Birmingham and Solihull Mental Health NHS Foundation Trust’s Information Governance Committee.